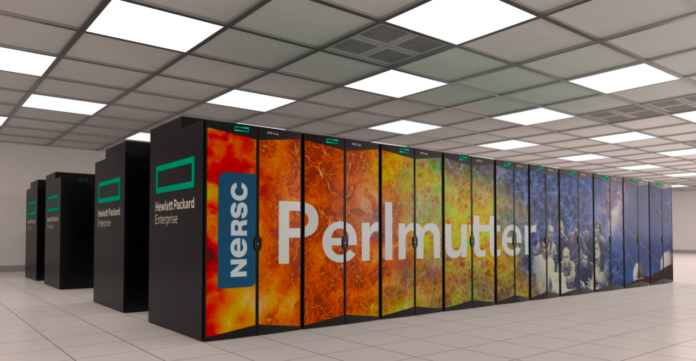

The United States’ National Energy Research Scientific Computing Center (NERSC) in California has switched on “Perlmutter,” a supercomputer touted to deliver nearly four exaflops of AI performance to more than 7,000 researchers.

Dion Harris, senior product marketing manager at NVIDIA, said that figure makes Perlmutter the world’s fastest system on the 16- and 32-bit mixed-precision math used in AI. And that performance does not yet include a second phase, due later this year, for the system based at Lawrence Berkeley National Lab, which runs NERSC for the US Department of Energy’s Office of Science.

Harris said more than two dozen applications are getting ready to be among the first to run on Perlmutter’s 6,159 NVIDIA A100 Tensor Core GPUs, making it the largest A100-powered system in the world.

“People are exploring larger and larger neural-network models, and there’s demand for access to more powerful resources, so Perlmutter with its A100 GPUs, all-flash file system and streaming data capabilities is well timed to meet this need for AI,” said Wahid Bhimji, acting lead for NERSC’s data and analytics services group.

Among other goals, these applications aim to advance astrophysics and climate science, help piece together a 3D map of the universe, and probe subatomic interactions for green energy sources.

In one project, the supercomputer will process data from the Dark Energy Spectroscopic Instrument (DESI), a kind of cosmic camera that can capture as many as 5,000 galaxies in a single exposure.

Dark energy was discovered largely through the Nobel Prize-winning work of astrophysicist Saul Perlmutter, who shared the 2011 Nobel Prize in Physics, is still active at Berkeley Lab, and will help dedicate the new supercomputer named for him.

Researchers need the speed of the supercomputer’s GPUs to process the dozens of exposures captured in a single night quickly enough to decide where to point DESI the next night. Preparing a year’s worth of the data for publication would take weeks or months on prior systems, but Perlmutter should help them accomplish the task in as little as a few days.

Traditional supercomputers can barely handle the math required to simulate a few atoms over a few nanoseconds with programs such as Quantum Espresso. But by combining these highly accurate simulations with machine learning, scientists can study more atoms over longer stretches of time.

“In the past it was impossible to do fully atomistic simulations of big systems like battery interfaces, but now scientists plan to use Perlmutter to do just that,” said Brandon Cook, an applications performance specialist at NERSC who is helping researchers launch such projects.