The Great Barrier Reef, which is located off the coast of Queensland, Australia, has been a concern for conservationists for some time now, given the effects of climate change.

The rising temperature of the Earth causes a phenomenon called coral bleaching, which is bad news for marine life.

Therefore, not-for-profit organisations like Citizens of the Great Barrier Reef are redoubling their efforts to save one of the seven natural wonders of the world. They are doing this through initiatives like the Great Reef Census, an annual survey of the far reaches of the 2,300-kilometre coral reef system.

However, researchers are having difficulty gathering and processing data, despite the huge amount of volunteer support pouring in from around the world.

According to Professor Peter Mumby, a professorial research fellow at the University of Queensland, time is of the essence, especially given that there have been four bleaching events, or heat waves, in the past seven years.

“For example, in 2016, about half of the corals in the Great Barrier Reef bleached, which is a symptom of stress. It doesn’t necessarily mean that when a coral bleaches, that it’s going to die. If the sea temperature returns to normal, then the corals can recover. But with really severe heat waves, you can see large areas die out,” the academic explained during a media briefing hosted by the Citizens of the Great Barrier Reef, the University of Queensland, and Dell Technologies.

“From a conservation or management perspective, you can’t actually stop heat waves. There are people investigating technologies to help address that, but really, what you can do is try to improve the capacity of the reef to recover once there’s been a bleaching event like this,” he added.

All hands on deck

Realising that it was already the 11th hour, Citizens of the Great Barrier Reef quickly began collecting data from coral reefs through the Great Reef Census.

Scientists, divers, tourists, fishers, and skippers on a community research flotilla capture images, which are then analysed by citizen scientists globally to help locate key source reefs important for coral recovery.

Going into the project, the initiative was beset with three major data challenges:

- How to get people to collect the data.

- How to get the collection uploaded.

- How to handle the massive volume of data in a timely fashion.

As such, the organisation partnered with Dell Technologies to overcome these obstacles. Dell designed and implemented a strategy for real-time data collection at sea, which uses a Dell edge solution deployed on multiple watercraft. These, in turn, automatically upload data directly to servers upon return to land, via a mobile network.

During its first run in 2020, the flotilla managed to capture 13,000 images. The following year, the number of images grew to 42,000. Data from the census helped the Great Barrier Reef Marine Park Authority prioritise control sites.

Having solved the first two data challenges, the nonprofit was now faced with the last one — how to analyse the data faster.

“How do we create a system that could engage citizen scientists all over the world? In the analysis, we did try a new one, an analysis platform, but it was complex, time consuming, tricky to run, and a bit glitchy. So that kind of left us with, okay, this is something we really need to solve,” said Andy Ridley, CEO of Citizens of the Great Barrier Reef.

To this end, Dell turned to deep learning technology.

“We knew that the citizen scientists in the first phase were spending up to seven minutes per photo — uploading them, detecting them, selecting all the parameters of the photo, choosing what was refilled, and what wasn’t, and so forth. We knew that this was taking a certain amount of time, and the accuracy levels varied from a citizen scientist using it, to a researcher that was able to be a lot more accurate in how they were looking at reef images,” said Danny Elmarji, Vice President, Presales, APJ, Dell Technologies.

Although more images and more volunteer participation are desirable, Dell first had to solve the bottlenecks in the analysis process.
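To put the bottleneck in perspective, the figures quoted above can be combined into a rough upper bound on manual effort. The sketch below assumes, purely for illustration, that each of the 42,000 images from 2021 is analysed once by a single volunteer at the full seven minutes; the function name is hypothetical and the result is an estimate, not a figure reported by the project.

```python
def manual_analysis_hours(image_count: int, minutes_per_image: float) -> float:
    """Return total volunteer hours if every image is analysed once by hand."""
    return image_count * minutes_per_image / 60


# Figures taken from the article: 42,000 images (2021), up to 7 minutes per photo.
# Assumption: one pass per image, always at the seven-minute upper bound.
hours_2021 = manual_analysis_hours(42_000, 7)
print(f"Upper-bound manual effort for 2021: {hours_2021:,.0f} hours")
```

Under those assumptions, a single year of images would demand several thousand volunteer hours, which is why analysis speed became the limiting factor.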

Deep learning technology

Going into the nuts and bolts of deep learning, how exactly does it work to solve the data challenges encountered by the Great Barrier Reef conservationists?

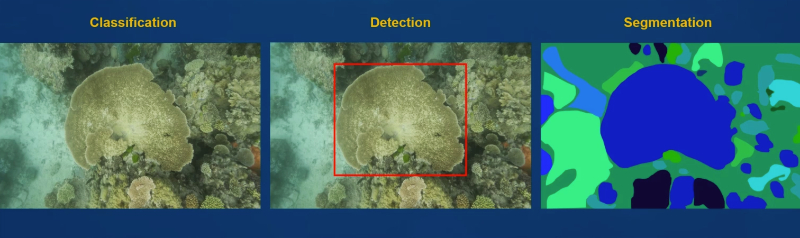

“We are using segmentation algorithms for analysing the images for (the) census,” revealed Aruna Kolluru, Chief Technologist, Emerging Technologies, Dell Technologies APJ. “In segmentation, every pixel in the image is analysed and classified to say which category it belongs (to).”

According to Kolluru, there are two different segmentation methods: instance segmentation, where every instance of a category is identified separately; and semantic segmentation, which is what the project is using. The latter treats all the pixels of the same category as one segment.
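The difference between the two methods can be illustrated on a toy example. The sketch below is not the project's model: it uses a hypothetical 4x6 grid of per-pixel labels, and it approximates the instance view with a simple connected-component search, standing in for what a trained instance-segmentation network would predict.

```python
from collections import deque

# Hypothetical per-pixel class labels for a tiny image (0 = background, 1 = coral).
SEMANTIC_MASK = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
]


def semantic_pixel_counts(mask):
    """Semantic view: count pixels per category, ignoring which object they belong to."""
    counts = {}
    for row in mask:
        for label in row:
            counts[label] = counts.get(label, 0) + 1
    return counts


def instance_labels(mask, category):
    """Instance view: give each 4-connected blob of `category` its own id (1, 2, ...)."""
    height, width = len(mask), len(mask[0])
    ids = [[0] * width for _ in range(height)]
    next_id = 0
    for r in range(height):
        for c in range(width):
            if mask[r][c] != category or ids[r][c]:
                continue  # wrong category, or already assigned to a blob
            next_id += 1
            ids[r][c] = next_id
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill over neighbouring pixels
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] == category and not ids[ny][nx]:
                        ids[ny][nx] = next_id
                        queue.append((ny, nx))
    return ids, next_id


print(semantic_pixel_counts(SEMANTIC_MASK))   # one "coral" segment covering all coral pixels
print(instance_labels(SEMANTIC_MASK, 1)[1])   # number of separate coral blobs
```

For estimating how much of an image is reef, the semantic view is sufficient: what matters is the total area per category, not how many individual colonies there are.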

Meanwhile, the infrastructure platform that supports Dell’s deep learning solution has two different elements: one for training, and one for inferencing.

“(The) cluster for training is leveraging Dell PowerEdge servers and GPU acceleration for training. We also have PowerScale (i.e., a software-defined Dell storage product), which eliminates the I/O bottlenecks, and provides both high-performance concurrency and scalability for both training and validation of the AI models. On this platform, we trained different deep learning models, unit segment, deep learning, deep lab, and others,” Kolluru shared.

For inferencing, PowerScale is again utilised, with each server running VMware so that multiple AI inferences can be performed at the same time.

“With every pixel in an image, we started to classify the reef borders, and in under 10 seconds, we used a human to verify the accuracy of the label. What happens now is Dell Technologies is working between this human and machine partnership, to take what was close to 144 different categories of reef organisms, and really dividing them into subcategories. We were able to make a shortlist of 13 categories, and repeatedly refine them until there were only really five critical categories. That’s going from 144 to five, making it really easy for the humans to verify what was (part of the) reef or not,” Elmarji elaborated.
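The consolidation Elmarji describes, from many fine-grained labels down to a handful of categories, can be sketched as a simple lookup. The article does not name the 144 original categories or the final five, so every label and grouping below is hypothetical and chosen only to illustrate the idea.

```python
# Hypothetical mapping from fine-grained labels to a few coarse categories.
# Neither the label names nor the groupings come from the project.
FINE_TO_COARSE = {
    "branching_coral": "coral",
    "plate_coral": "coral",
    "massive_coral": "coral",
    "turf_algae": "algae",
    "macroalgae": "algae",
    "sand": "substrate",
    "rubble": "substrate",
    "water": "other",
}


def collapse(labels, mapping, default="other"):
    """Replace each fine-grained label with its coarse category."""
    return [mapping.get(label, default) for label in labels]


def coarse_cover(labels, mapping):
    """Return the fraction of pixels that falls into each coarse category."""
    coarse = collapse(labels, mapping)
    total = len(coarse)
    return {category: coarse.count(category) / total for category in sorted(set(coarse))}


# A made-up strip of eight pixel labels, including one the mapping does not know.
pixels = ["branching_coral", "plate_coral", "sand", "water",
          "macroalgae", "branching_coral", "unknown_thing", "rubble"]
print(coarse_cover(pixels, FINE_TO_COARSE))
```

With fewer categories to choose between, a human reviewer only has to confirm a coarse label such as "coral" or "not coral", which is consistent with the short verification times quoted above.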

Project continuity

Now in its third year, the Great Reef Census kicks off in October 2022. Through the partnership between Citizens of the Great Barrier Reef, the University of Queensland, and Dell, the following has been achieved so far:

- Improved citizen experience.

- Massive improvement in quality of citizen scientist analysis.

- Improved trust in the value of citizen science.

- Demonstrated value of machine-human partnerships.

- Acceleration of research.

- Improved conservation effort.

- Constantly improving process.

- Scalable technology.

In terms of scalability, Elmarji argued that deep learning has an advantage in dealing with data challenges.

“The AI doesn’t get bored, whereas citizen scientists can get bored. Imagine uploading all those images and having to repeatedly go through, and stencil out what’s reef and what’s not reef. As humans, our attention spans can drift on occasion, but AI doesn’t. It allowed us to apply something that was purposely built almost for an AI engine to drive it. So, the more data we get, the more accurate the model gets. But this is a really good example of this kind of symbiotic relationship that we believe is going to coexist for some time between humans and machines,” he remarked.

Meanwhile, Mumby emphasised the importance of extending the topic of reef conservation beyond the government and the scientific community.

“There are so many dedicated people who are doing a lot, but this is just another way in which citizens can contribute towards an important pathway of building the reef’s resilience. We just can’t do it alone; we have to work in partnership,” he noted.

As for future use cases, Ridley is banking on the evolution of the internet and smartphones in the next two to three years, as a catalyst for their conservation model to be applicable on a global scale.

“We are trialling this new conservation operating system on the Great Barrier Reef but ultimately, we’re building an infrastructure that can be scaled to reefs and marine ecosystems around the world. Our ambition is to share our 21st century conservation model with reef communities around the world, opening up the possibility for reef conservation to scale to a level we have not seen before,” Ridley said.