Data is growing at an unprecedented pace. According to Dell Technologies’ recently released 2020 Global Data Protection Index (GDPI) snapshot survey, organisations in Asia Pacific and Japan (APJ) are, on average, managing 64% more data than they were a year ago. The study, conducted by the research firm Vanson Bourne, found that in 2019 IT decision makers in this region managed an average of 13.31 petabytes of data, a staggering 693% increase since 2016.

This exponential data growth, combined with the increasing value of data, also poses fresh and often unforeseen risks. Across the region, 84% of respondents reported experiencing a disruptive event, such as downtime or data loss, in the past 12 months, up from 80% in the previous GDPI survey. The average annual cost of data loss over this 12-month period exceeded US$1.3 million, higher than in other regions and above the global average of US$1.01 million. Meanwhile, the cost of downtime surged by 61%, from almost US$495k in 2018 to US$794k in 2019.

The study also revealed a growing acknowledgement of the challenges surrounding data protection and, more broadly, data management. The majority of IT decision makers lacked confidence that their current solutions could help them recover data following a cyber-attack, comply with regulations, meet application service levels and prepare for future data protection business requirements. It’s no surprise, then, that more than two-thirds of organisations are concerned they will experience a disruption in the next 12 months.

The proliferation of data also gives organisations an opportunity to grow revenue and strengthen their competitive position. By adopting three key data management strategies in the upcoming financial year, they can fuel innovation, drive new revenue streams and gain deep insight into the needs of their customers, partners and stakeholders.

Partner with a single data protection provider

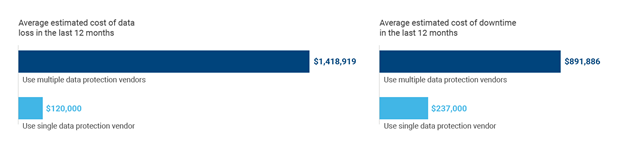

The survey found that 83% of respondents in APJ were using solutions from multiple data protection vendors. Ironically, these organisations are likely investing more time, money and staff to protect their data and applications, yet their annual data loss and downtime costs are significantly higher than those of organisations working with a single data protection vendor.

When identifying the most effective data protection strategy for your business, consider that multi-vendor solutions often lead to increased IT complexity. Disparate management tools, combined with reliance on multiple vendors for service and support, can make day-to-day data protection management very challenging, especially during a downtime event.

Investing in a single data protection partner with an extensive portfolio of solutions to protect data in all its forms (on-premises, in remote locations and in the cloud) is a surefire way to reduce complexity and mitigate the risk of extended downtime during an outage.

Prioritise ease of management across locations

Besides growing exponentially, data is also more distributed than ever before, residing across multiple public clouds, in the core data centre and out across hundreds or even thousands of edge locations.

Organisations would do well to ensure that their data is protected and recoverable, no matter where it resides. To do so, they should adopt data protection solutions that are easy to manage and scale, deliver resiliency and provide global visibility, so that data is protected across every environment: on-premises, at edge locations and across hybrid, multi-cloud infrastructure.

Look for scale and automation in data management solutions

Because data management is central to an organisation and its growth, a solution should provide the global scale that businesses need as application workloads and data volumes increase exponentially in the coming years.

Moreover, to minimise disruption and combat data protection complexity, organisations need simple, reliable, efficient, scalable and more automated solutions for protecting applications and data regardless of the platform (physical, virtual, containers, cloud-native, SaaS, etc.).

As the volume, variety and velocity of data grow rapidly, and as workloads span the distributed core, the edge and the cloud, organisations must develop robust data management strategies to combat increasing security threats and cyber-attacks.